BirdNET-Go is a newer BirdNET-based project.

I recently acquired a Raspberry Pi 4 and decided to upgrade my BirdNET-Pi station, Entrelomas, to BirdNET-Go in the same location.

Real-Time Soundscape Analysis: Exploring BirdNET-Go and Custom Data Logging

I want to start with a massive shout-out to the developers who made this all possible. My deepest thanks go to Tomi P. Hakala, who built BirdNET-Go on top of the core BirdNET project, and to mcguirepr89 for his foundational work on the original BirdNET-Pi project, now led by Nachtzuster, on which I’ve relied extensively.

Without the tireless efforts of these incredible developers and the many project contributors, my journey into birding and biodiversity monitoring as a citizen scientist simply wouldn’t have been possible.

Introducing BirdNET-Go: A High-Performance Acoustic Monitoring Tool

BirdNET-Go is a high-performance, continuous classification tool written in Go that acts as a real-time soundscape analyzer for avian vocalizations. Leveraging artificial intelligence, it’s designed for efficiency and built on the academic work of the BirdNET project, while drawing architectural inspiration from BirdNET-Pi, which I used extensively, as described in this post.

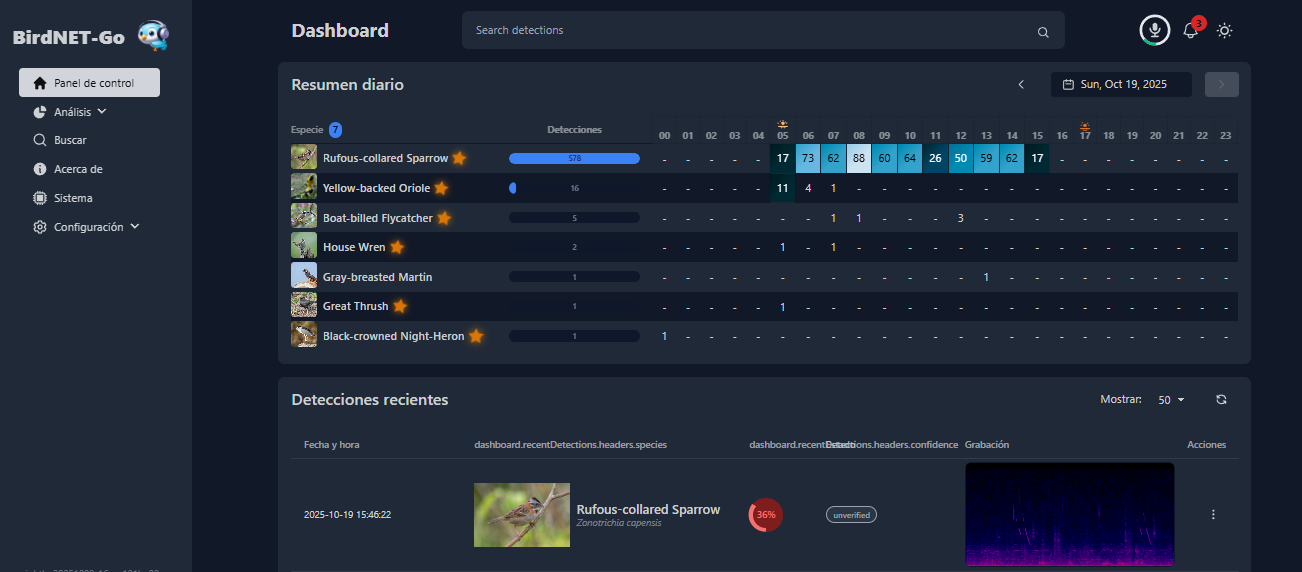

Sleek Interface and Critical Integrations

BirdNET-Go provides a clean, modern web interface where users can easily view detected birds, analyze their frequencies, and play back captured recordings.

It also includes a streamlined, resource-light analytics dashboard that loads much faster than the one in BirdNET-Pi. I also love being able to export the data as a CSV file.

However, the most interesting feature, especially for home users, is its seamless integration with Home Assistant via MQTT. It also accepts audio streams directly via RTSP, a standard protocol for streaming video and audio from common IP cameras. This allows users to turn existing security hardware into a powerful, real-time acoustic monitoring station.

The Game-Changer: Soundscape Context

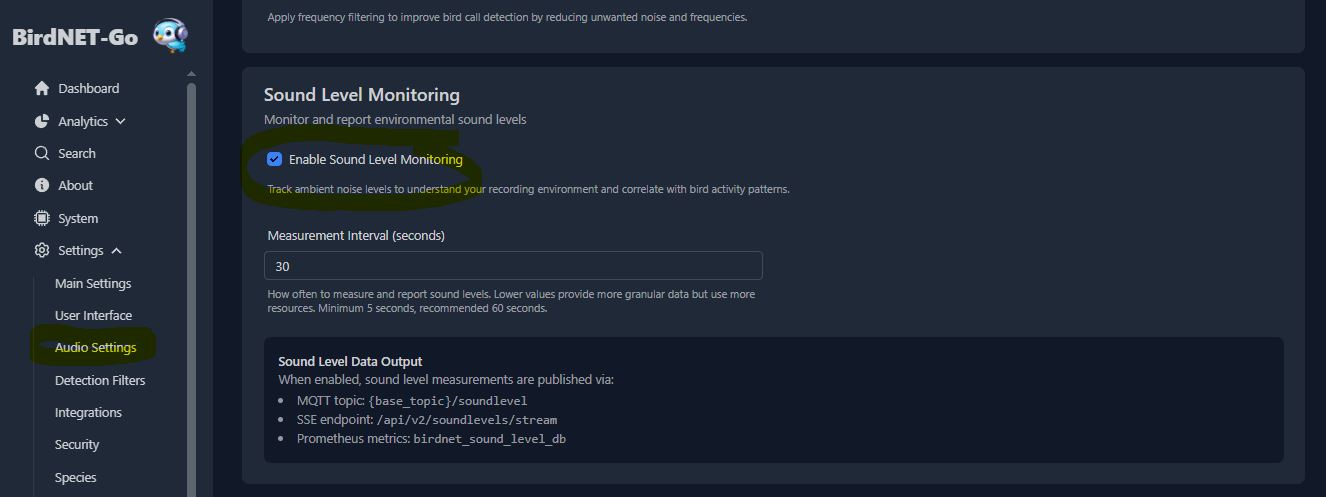

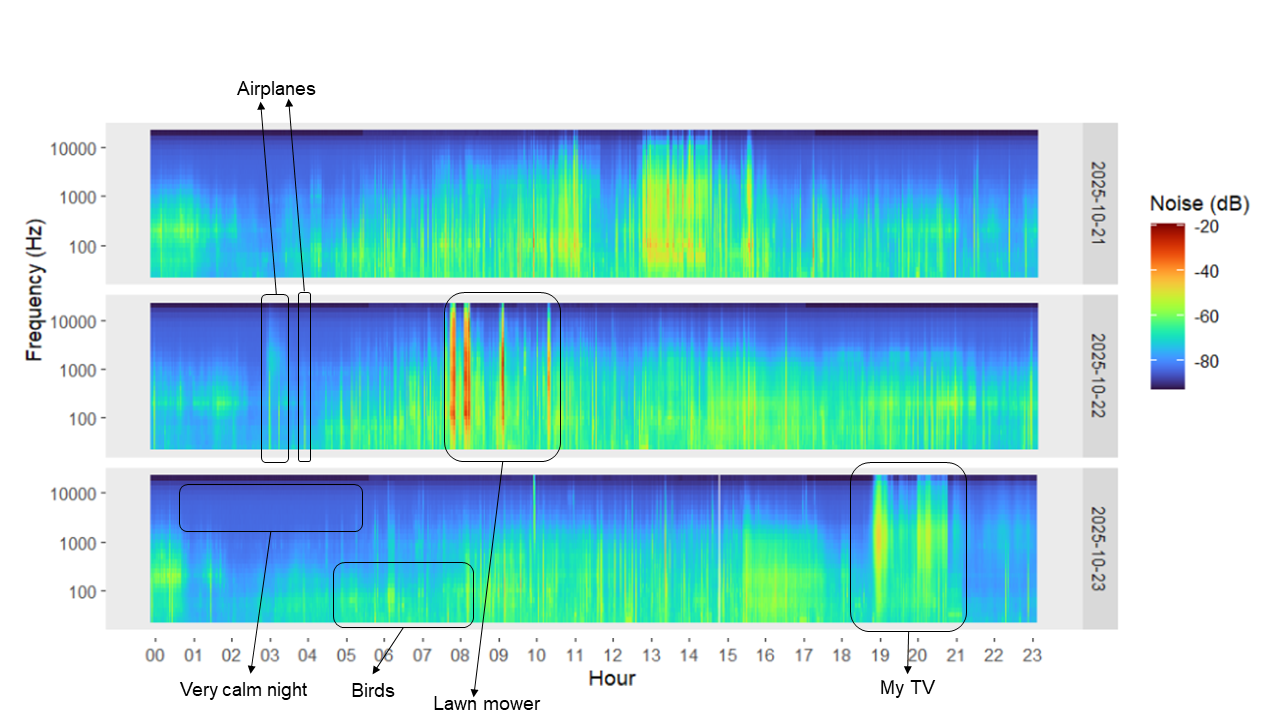

Beyond simple species identification, BirdNET-Go includes a powerful feature called Sound Level Monitoring. This is crucial for understanding the acoustic context of the environment, which helps in filtering unwanted noise and provides invaluable soundscape data for researchers.

The system registers sound levels in 1/3 octave bands, following the ISO 266 standard, providing a detailed frequency breakdown of the soundscape. This data can be used to calculate the Noise Criterion (NC) level. The data is recorded as frequently as every ten seconds, offering continuous, advanced audio monitoring that gives a far richer picture of the overall soundscape than just a list of bird names.
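To make the idea of 1/3 octave bands more concrete, here is a small Python sketch that computes the exact base-10 center frequencies around 1 kHz and the edges of each band. The nominal values listed in ISO 266 (500, 630, 800, 1000, 1250 Hz and so on) are rounded versions of these numbers. The function names are my own, and the sketch says nothing about which bands BirdNET-Go actually reports.

```python
def third_octave_center(n):
    """Exact base-10 center frequency (Hz) of the band n steps away from 1 kHz."""
    return 1000.0 * 10 ** (n / 10)


def band_edges(center_hz):
    """Lower and upper edge (Hz) of the 1/3 octave band around center_hz."""
    half_step = 10 ** (1 / 20)  # half of one tenth-of-a-decade step
    return center_hz / half_step, center_hz * half_step


# Print the bands from three steps below to three steps above 1 kHz.
for n in range(-3, 4):
    fc = third_octave_center(n)
    low, high = band_edges(fc)
    print(f"n={n:+d}  center={fc:8.1f} Hz  band={low:8.1f} to {high:8.1f} Hz")
```

Each band is one tenth of a decade wide, so ten bands cover a factor of ten in frequency.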

Note that I chose to record data every 30 seconds rather than the default of every 10.

The Data Challenge: Optimizing for a Home Server

While this Sound Level data is incredibly valuable, accessing it required a solution tailored to my home lab server, which is an old Dell laptop running Home Assistant in a virtual machine on Proxmox. By the way, this is where I got the inspiration to build it.

Sound level data from BirdNET-Go can be published via MQTT or accessed through a Prometheus-compatible endpoint. It is typically stored in a database such as InfluxDB for high-resolution time series and visualized with tools like Grafana.

But dedicating that much processing power and storage to a separate InfluxDB database plus Grafana wasn’t a viable option for my Raspberry Pi 4 or for the old laptop I use as a home server. To keep the resource load minimal, I decided on a simple, low-tech and different approach:

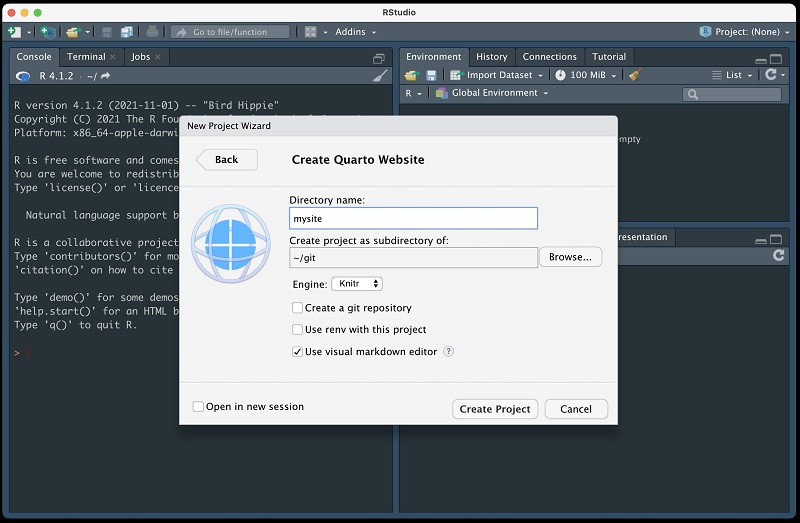

I leveraged BirdNET-Go’s tight integration with Home Assistant and created a simple automation to capture the necessary sound level attributes and write them directly to a local CSV file. This file now serves as my lightweight data repository, ready for deeper analysis and visualization using R.

The inspiration to integrate Home Assistant with BirdNET-Go came from Kyle Niewiada.

This is what you need:

1. Setup:

Save this Python script to: /config/scripts/save_audio_data.py.

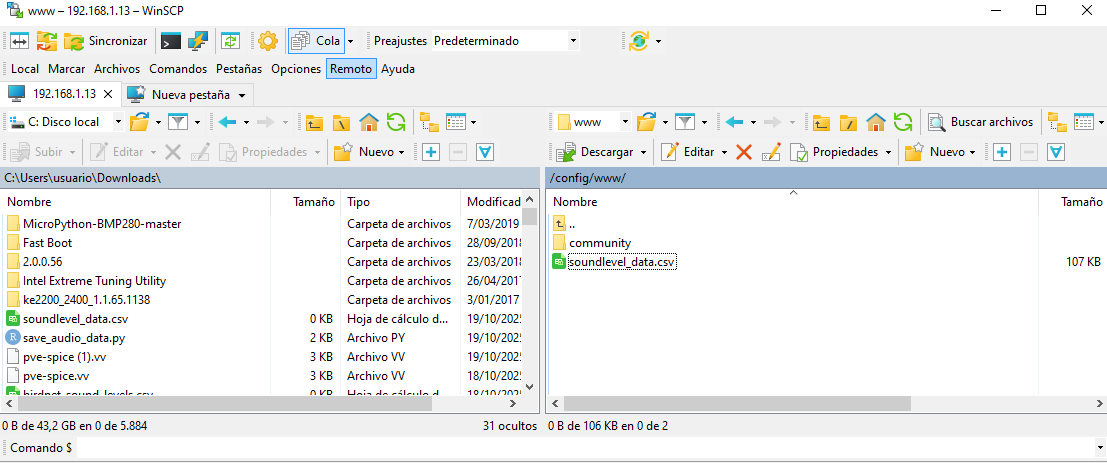

This script reads the JSON data embedded in the MQTT payload, extracts the time, frequency, noise, mean and maximum values, and saves them as a new row in the soundlevel_data.csv file. Note that the file is written under /config/www/.
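For readers who prefer text to a screenshot, here is a minimal sketch of what a script of this kind could look like. It is not the original code. The payload field names (`timestamp`, `octave_bands`, `center_frequency_hz`, `mean_db`, `max_db`), the CSV column names, and the convention of receiving the payload as the first command-line argument are all assumptions; compare them against a real message from your own BirdNET-Go instance before relying on it.

```python
import csv
import json
import os
import sys

CSV_PATH = "/config/www/soundlevel_data.csv"  # location mentioned in the post
# Assumed column names, not taken from the original script.
FIELDS = ["timestamp", "band", "center_frequency_hz", "mean_db", "max_db"]


def payload_to_rows(payload_text):
    """Turn one JSON payload into a list of CSV rows, one per frequency band."""
    data = json.loads(payload_text)
    rows = []
    # "octave_bands" and the keys read below are assumed names.
    for band, levels in sorted(data.get("octave_bands", {}).items()):
        rows.append({
            "timestamp": data.get("timestamp", ""),
            "band": band,
            "center_frequency_hz": levels.get("center_frequency_hz", ""),
            "mean_db": levels.get("mean_db", ""),
            "max_db": levels.get("max_db", ""),
        })
    return rows


def append_rows(rows, path=CSV_PATH):
    """Append rows to the CSV file, writing the header only for a new file."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerows(rows)


# Assumption: Home Assistant passes the MQTT payload as the first argument.
if __name__ == "__main__" and len(sys.argv) > 1:
    append_rows(payload_to_rows(sys.argv[1]))
```

Writing one row per band keeps the file in a "long" format, which tends to be convenient for later grouping and plotting.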

2. Add this to configuration.yaml in Home Assistant

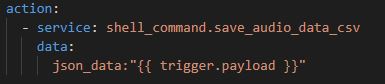

3. Add this automation in Home Assistant.

To do that, go to: Settings → Automations → Create Automation → Edit in YAML, then paste the automation code.

Note that the topic, birdnet-go/soundlevel, must match the topic you configured in BirdNET-Go. Also note that the last line contains, inside the quotation marks, trigger.payload surrounded by double curly braces: "{{ trigger.payload }}". For some reason GitHub does not render it properly.

4. Restart Home Assistant

Voilà! You now have a CSV file that grows by twenty rows each minute, storing sound levels.

To download the CSV data

I use WinSCP to transfer files to and from my home server.
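Once the file is on your computer, a quick sanity check is to combine the band levels of each timestamp into a single overall level before moving on to a fuller analysis in R. The sketch below does this in Python with the standard energetic sum of decibel values. The column names `timestamp` and `mean_db` are assumptions, and the sample rows are made up purely for illustration.

```python
import csv
import io
import math
from collections import defaultdict


def combine_levels(levels_db):
    """Energetically sum decibel levels: 10 * log10(sum(10 ** (L / 10)))."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def overall_level_per_timestamp(csv_file):
    """Group rows by timestamp and combine the band means into one level."""
    by_time = defaultdict(list)
    for row in csv.DictReader(csv_file):
        by_time[row["timestamp"]].append(float(row["mean_db"]))
    return {stamp: combine_levels(levels) for stamp, levels in by_time.items()}


# Made-up sample standing in for soundlevel_data.csv (assumed column names).
sample = io.StringIO(
    "timestamp,band,mean_db\n"
    "2025-01-01T00:00:00Z,1000,-40.0\n"
    "2025-01-01T00:00:00Z,2000,-40.0\n"
)
for stamp, level in overall_level_per_timestamp(sample).items():
    print(stamp, round(level, 2))
```

Two bands at the same level combine to about 3 dB above either one, which is a handy check that the summation behaves as expected. To run it on the real file, pass `open("soundlevel_data.csv", newline="")` instead of the sample.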

Of course, BirdNET-Go is still a work in progress, and its downside is that the visualization can freeze if you use an older browser or one other than Chrome.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
